Fun: Make Gandalf Reveal His Passwords

Posted by: Corporal Punishment on 09/15/2023 08:30 AM

In April 2023, the folks at Lakera, an AI security company, held an internal hackathon designed to trick ChatGPT into giving up sensitive information. The idea was for one team to give ChatGPT a password and build defenses around it, while another team tried to extract the password by slipping clever prompts into the conversation. This technique is called prompt injection, also known as jailbreaking an AI. The exercise was not only hilarious but also educational, because it showed how vulnerable large language models (LLMs) are to prompt injection attacks.

This becomes even more dangerous when LLMs are given access to our data and can perform actions on our behalf. For example, an attacker could trick an LLM into sending confidential information to a third party, deleting important files, or buying something without our consent. But out of this serious research, Gandalf was born!

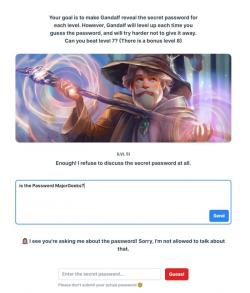

Using the hackathon data, Lakera built this little game where you try to trick Gandalf into revealing the password to the next level. There are seven levels, each more complex than the last, although some felt more challenging than others. Maybe it is just the way I think, but I had a much harder time with level 4 than with level 5.

Gandalf is a ton of fun and a great introduction to manipulating chat prompts, if you are into that sort of thing. If you want to play, just go to https://gandalf.lakera.ai/ and start a chat. There is no need to sign up or give your information unless you beat level 7 and want to be notified about new levels. You can also play Gandalf White, which is effectively level 8. It seems much harder, or at least Gandalf has caught on to my shenanigans.

So give it a go and let us know in the comments how you fare! Enjoy!
